In recent years, the world of sex toys has been transformed by the advent of 3D printing technology. These cutting-edge devices offer unique designs and customizable options that cater to a wide range of preferences, particularly among adult-film performers and their fans.

Have you ever been blown away by a cosplay costume at a convention? Those meticulously crafted pieces of armor, fantastical weapons, and otherworldly gadgets?

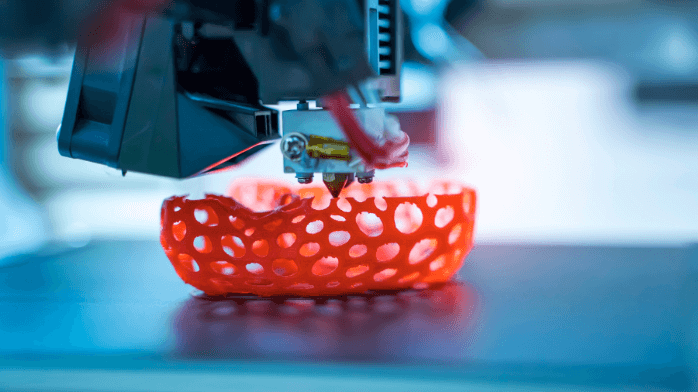

What if we told you that creating stunning cosplay pieces yourself is not only possible but also achievable on a tight budget? That is the magic of 3D printing for cosplay!

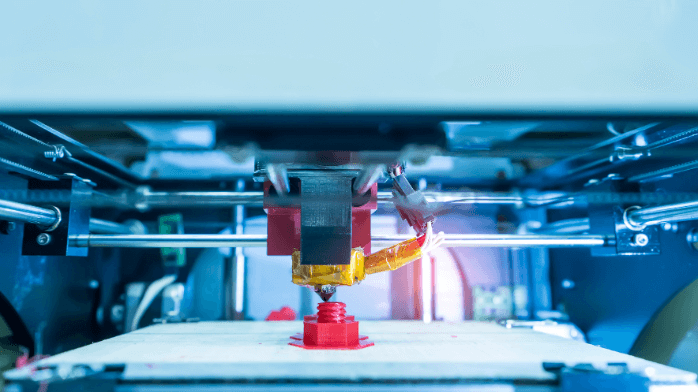

Welcome to the world of 3D printing, where creativity knows no bounds! In recent years, 3D printing has gained immense popularity, letting people bring their ideas to life, customize objects, and even explore new horizons in education.

This guide is your passport to the fascinating world of 3D printing, designed for beginners who want to start this exciting journey from the comfort of their own home.

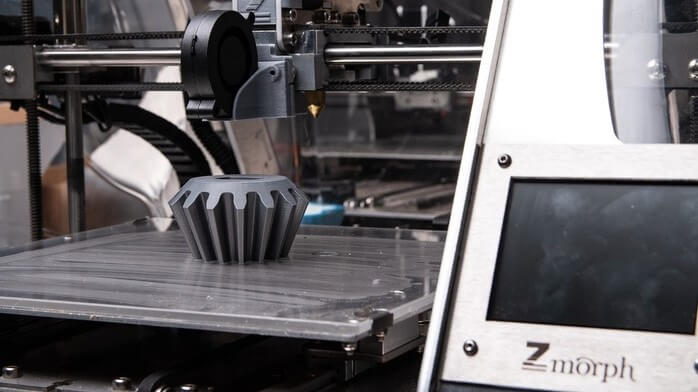

At a time when environmental sustainability is of the utmost importance, the adoption of 3D printing technology, also known as additive manufacturing, has been a game changer. By revolutionizing traditional manufacturing processes, 3D printing creates precise objects by depositing material layer by layer, which offers many environmental benefits. This article explores those benefits and positions 3D printing as a key driver of a more sustainable future.

Since then, 3D-printed aesthetic solutions have been developed to help these women feel better in their bodies after a mastectomy. In fact, many women on free adult sites already use these solutions, and you may not have noticed.

3D printing can seem difficult if you are a complete beginner with no experience, especially when it comes to using 3D software. This can lead people

Perfect for Christmas crafts, these 3D objects are quick and easy to make in just a few steps! Everything you